

Disclaimer: The details in this post have been derived from the details shared online by the DoorDash Engineering Team. All credit for the technical details goes to the DoorDash Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

In mid-2021, DoorDash experienced a production outage that brought down the entire platform for more than two hours.

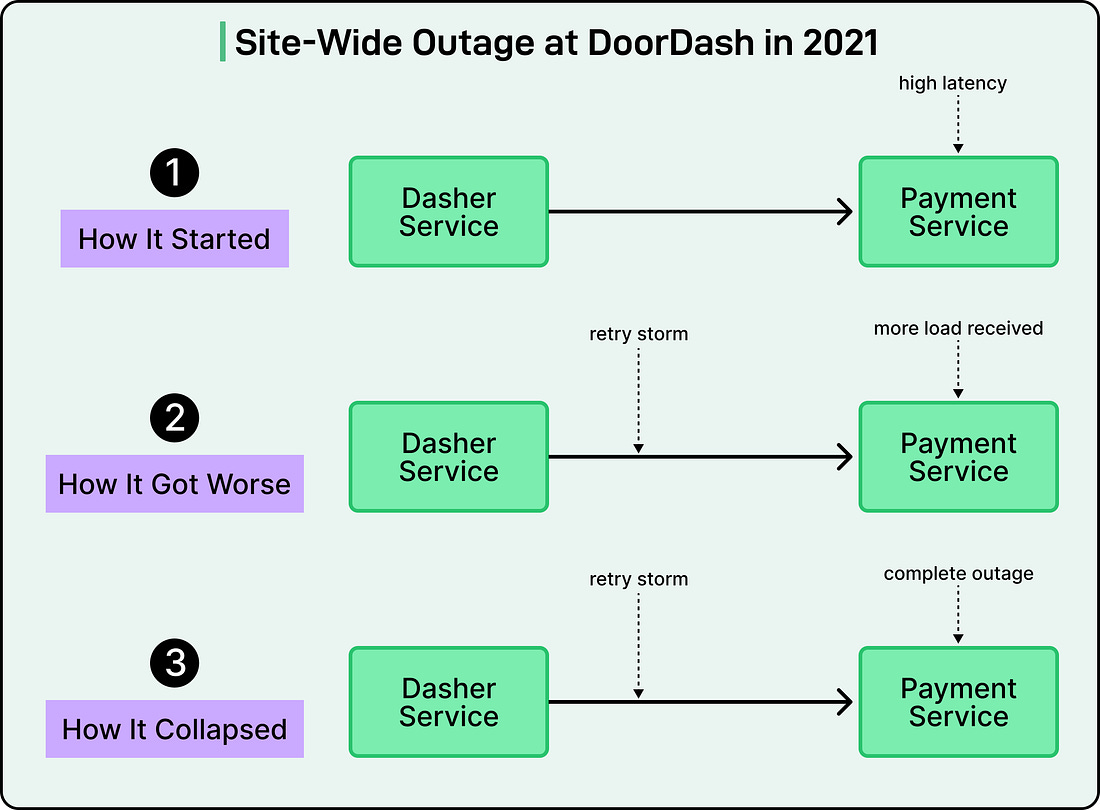

The incident started with the payment service experiencing high latency. Clients of this service interpreted the slow responses as potential failures and retried their requests. This created a retry storm where each retry added more load to an already overwhelmed service. The cascading failure spread through DoorDash’s microservices architecture as services depending on payments started timing out and failing.
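To make the amplification concrete, here is a back-of-the-envelope sketch in Python. It assumes a simple chain of services in which every caller makes one initial attempt plus a fixed number of retries, and every attempt fails. The function name and the numbers are illustrative assumptions, not figures from the DoorDash incident.

```python
def worst_case_attempts(max_retries: int, depth: int) -> int:
    """Upper bound on requests hitting the deepest service per user request.

    Assumes a chain of `depth` callers, each making 1 initial attempt plus
    up to `max_retries` retries, with every attempt failing.
    """
    attempts_per_hop = 1 + max_retries
    return attempts_per_hop ** depth


if __name__ == "__main__":
    # With 3 retries per hop, the load multiplies at every layer of the chain.
    for depth in (1, 2, 3):
        print(f"depth={depth}: up to {worst_case_attempts(3, depth)} attempts")
```

Under these assumptions, three layers of callers with three retries each can turn one user request into as many as 64 requests against the struggling service, which is why uncoordinated retries make an overload worse rather than better.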

See the diagram below:

This wasn’t an isolated incident. DoorDash had already experienced a series of similar issues, which may have been prompted by its transition from a monolith to a microservices architecture between 2019 and 2023.

DoorDash was not blind to reliability concerns. The team had already implemented several reliability features in their primary Kotlin-based services. However, not all services used Kotlin, and those that didn’t either had to build their own mechanisms or go without. The payment service was one of these non-Kotlin services.

The outage made one thing clear: their patchwork approach to reliability wasn’t working. The incident demonstrated that reliability features like Layer 7 metrics-aware circuit breakers and load shedding couldn’t remain the responsibility of individual application teams.
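For readers unfamiliar with the pattern, the following is a minimal sketch of a circuit breaker in Python. It is a deliberately simplified, count-based version that trips after a number of consecutive failures. It is not DoorDash’s implementation and it is not metrics-aware. The class name, thresholds, and injectable `clock` parameter are all assumptions made for illustration.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the service."""


class CircuitBreaker:
    """Simplified breaker: not thread-safe, trips on consecutive failures."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"  # allow a probe call through
        return "open"

    def call(self, fn, *args, **kwargs):
        if self.state == "open":
            # Fail fast instead of adding load to an unhealthy dependency.
            raise CircuitOpenError("failing fast; downstream looks unhealthy")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._failures += 1
            # Trip on reaching the threshold, or re-trip after a failed probe.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

The point of the pattern is that once a dependency looks unhealthy, callers stop sending it traffic for a while instead of piling on. Implementing this once at the platform level avoids each team writing and tuning its own variant.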

Based on this realization, the DoorDash engineering team decided to transition to a service mesh architecture to handle their traffic needs. By the time they began this adoption, DoorDash was operating at significant scale with over 1,000 microservices running across Kubernetes clusters containing around 2,000 nodes. Today, the system handles more than 80 million requests per second during peak hours.

In this article, we will look at how DoorDash’s infrastructure team went through this journey and the difficulties they faced.

Challenges of Microservices Architecture

After migrating away from the monolithic architecture, DoorDash began to encounter several classic challenges of microservices and distributed systems.

Services were communicating using different patterns with no standardization across the organization:

HTTP/1 services relied on Kubernetes DNS with static virtual IPs, where iptables handled connection-level load balancing.

Newer services used Consul-based multi-cluster DNS that returned all pod IPs directly, requiring client-side load balancing that was inconsistently implemented across different programming languages and teams.

Some gRPC services were exposed through dedicated AWS Elastic Load Balancers with external traffic policy settings to route traffic correctly.

Certain services routed requests through a central internal router, creating a potential bottleneck and a single point of failure.

Some services made full round-trips through public DoorDash domains before hairpinning back into internal systems. This hurt both performance and reliability.
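To illustrate what client-side load balancing means in the Consul-based pattern above, here is a minimal round-robin sketch in Python. It assumes the client has already resolved the service name to a list of pod addresses. The class name and the addresses are hypothetical and not taken from DoorDash’s code.

```python
import itertools
import threading
from typing import Iterable, List


class RoundRobinBalancer:
    """Picks a backend per request by cycling through a fixed endpoint list."""

    def __init__(self, endpoints: Iterable[str]) -> None:
        self._endpoints: List[str] = list(endpoints)
        if not self._endpoints:
            raise ValueError("at least one endpoint is required")
        self._cycle = itertools.cycle(self._endpoints)
        self._lock = threading.Lock()  # keep pick() safe across threads

    def pick(self) -> str:
        with self._lock:
            return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical pod addresses, as a DNS lookup might return them.
    balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
    for _ in range(4):
        print(balancer.pick())
```

Even a balancer this small has to be written, tested, and kept up to date with endpoint changes in every language a company uses, which helps explain why the implementations drifted apart across teams.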

See the diagram below:

Beyond these varied communication patterns, critical platform-level features were implemented inconsistently:

Authentication and authorization mechanisms differed across services and teams, with no unified approach to securing service-to-service communication.

Retry and timeout policies varied by team and programming language, with some services lacking them entirely.

Load shedding and circuit breaker implementations were scattered and inconsistent, leaving many services vulnerable to exactly the kind of cascading failures they had just experienced.
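As an example of the kind of policy each team was left to reinvent, the sketch below shows a bounded retry helper in Python with capped exponential backoff and jitter. The function name, defaults, and injectable `sleep` and `rng` parameters are assumptions for illustration. A production policy would also retry only idempotent calls and only on transient errors, rather than on every exception as this simplified version does.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Callable[[float, float], float] = random.uniform,
) -> T:
    """Call `fn`, retrying failures up to `max_attempts` total attempts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts:
                raise  # retries exhausted; surface the last error
            # Exponential backoff capped at max_delay, with full jitter so
            # that many clients do not retry at the same instant.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng(0.0, cap))
    raise AssertionError("unreachable")
```

Bounding the number of attempts and spreading retries out over time are the two properties that keep a retry policy from turning into a retry storm.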

The resulting service topology had become increasingly complex, making system-wide visibility and debugging extremely difficu