What Is Ontology-Grounded Retrieval-Augmented Generation?

Discover how a semantic model of meaning makes AI answers more accurate

Technical writers keep hearing that Retrieval-Augmented Generation (RAG) makes AI answers more accurate because it grounds responses in real content. That is true — but it’s only part of the story.

RAG is often described as “LLMs plus search.” That description is incomplete and, for technical writers, potentially misleading.

Ontology-grounded RAG is a more advanced approach that anchors AI responses not just in retrieved content, but in an explicit semantic model of meaning. It ensures that AI systems retrieve and generate answers based on what things are, how they relate, and under what conditions information is valid.

If AI is becoming the public voice of our products, ontology-grounded RAG is how we keep that voice accurate, consistent, and accountable.

If you work with structured content, metadata, or controlled vocabularies, this topic is very much in your lane.

Why Standard RAG Is Insufficient

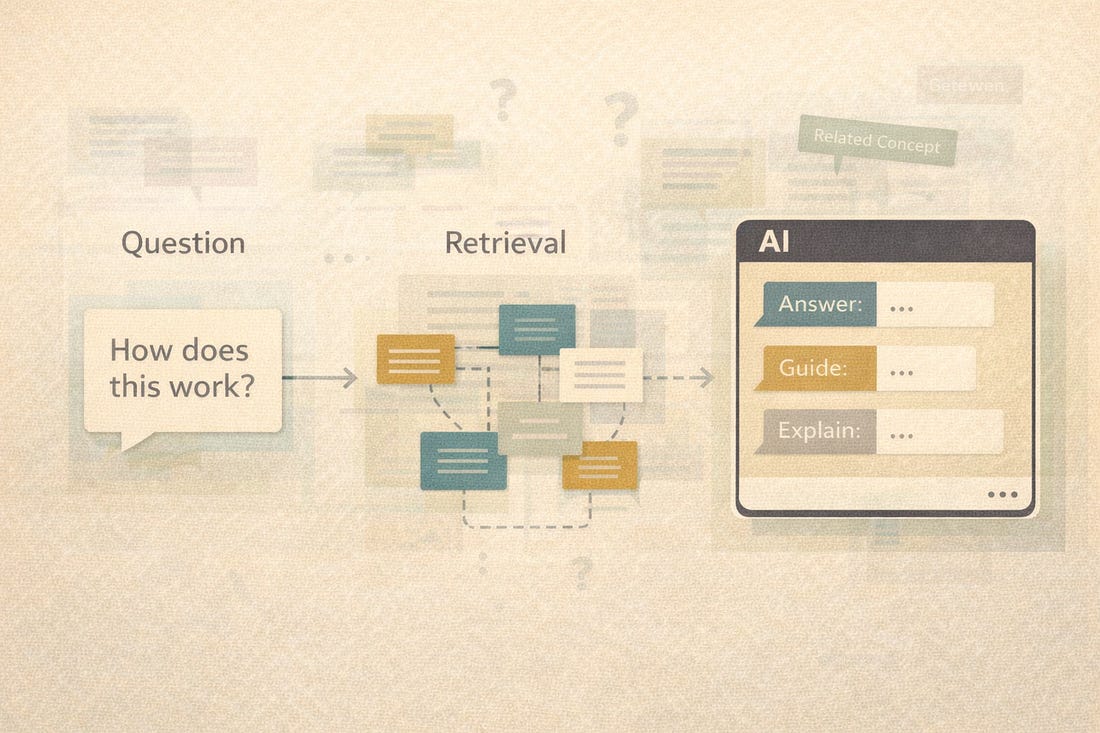

Standard RAG works like this:

1. A user asks a question.
2. The system retrieves relevant chunks of content (documents, passages, topics).
3. An LLM generates an answer using that retrieved text as context.
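The three steps above can be sketched in a few lines of Python. This is a toy illustration, not a production pipeline: the `CHUNKS` corpus, the word-count similarity, and the `build_prompt` helper are all assumptions made for the example, and the final LLM call is left out so the sketch runs on its own.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus; a real system would index far more content.
CHUNKS = [
    "To reset your password, open Settings and choose Security.",
    "Export reports as PDF from the Reports menu.",
    "The mobile app supports offline mode on version 2 and later.",
]

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, chunks, k=1):
    """Step 2: rank chunks by surface similarity to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]

def build_prompt(question, context):
    """Step 3: hand the retrieved text to the LLM as context."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"

question = "How do I reset my password?"          # Step 1: the user asks
top = retrieve(question, CHUNKS)
prompt = build_prompt(question, top)               # would be sent to an LLM
```

Note that nothing in this sketch knows what a "password" is. It only measures how much the question's wording resembles each chunk.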

This improves accuracy over “pure” generative AI, but it still has limits:

Retrieval usually ranks content by keyword overlap or embedding similarity, not by an explicit model of meaning

Related concepts may not be retrieved if the wording differs

The model may mix incompatible concepts or versions

There is little accountability for why something was retrieved
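The wording problem is easy to reproduce with a keyword-only scorer. The sketch below is a deliberately simple illustration (the `overlap` function and both sample questions are assumptions for the example). Embedding-based retrieval narrows this gap but offers no guarantee that two phrasings of the same concept land close together.

```python
import re

def tokens(text):
    """Return the set of lowercase word tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def overlap(question, chunk):
    """Jaccard overlap: shared words divided by all distinct words."""
    q, c = tokens(question), tokens(chunk)
    return len(q & c) / len(q | c)

chunk = "To reset your password, open Settings and choose Security."

# Same concept, two phrasings. Only the first shares words with the chunk.
same_wording = overlap("How do I reset my password?", chunk)
different_wording = overlap("How can I change my login credentials?", chunk)
```

Here `different_wording` comes out as zero even though the chunk answers the question, so a keyword-only retriever would skip it.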

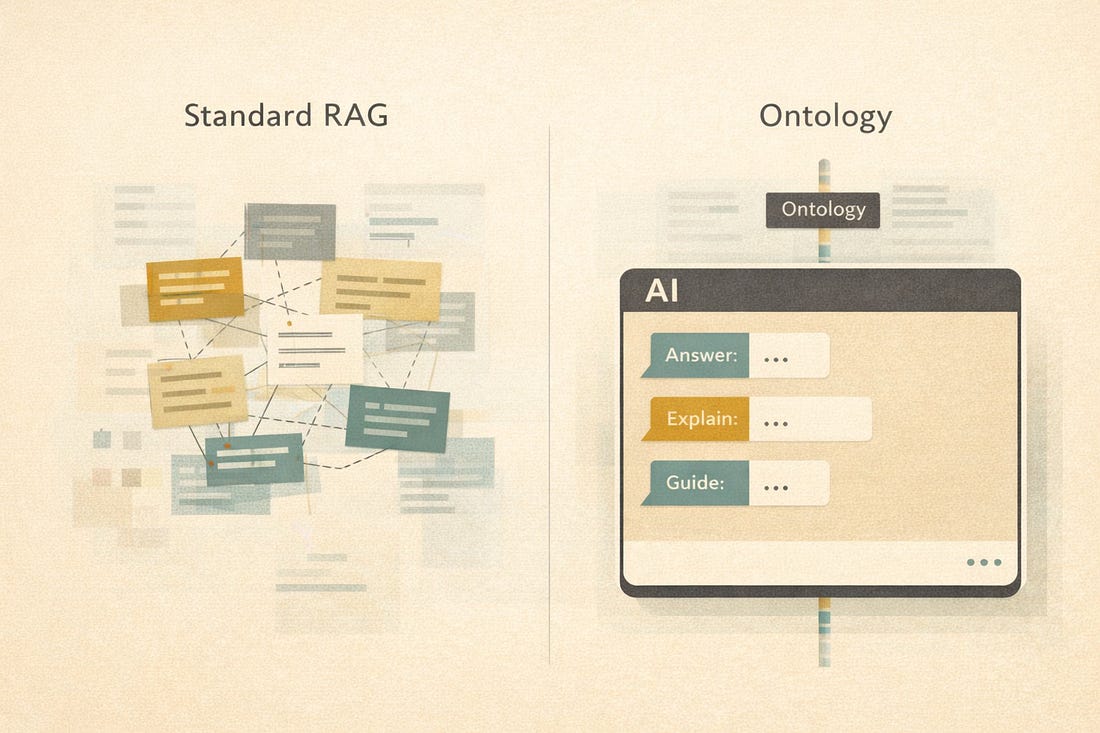

What Changes With Ontology-Grounded RAG?

Ontology-grounded RAG adds a formal knowledge model — an ontology — between your content and the AI.

An ontology explicitly defines:

Concepts (products, features, tasks, warnings, roles, versions)

Relationships (depends on, replaces, applies to, incompatible with)

Constraints (this feature only applies to this product line or version)

Synonyms and preferred terminology
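As a rough sketch, the four ingredients above could be represented with a couple of small data structures. Everything here is hypothetical: the `Concept` and `Relation` classes, the field names, and the sample features are invented for illustration. Production ontologies are more commonly expressed in standards such as OWL or SKOS than in ad hoc Python.

```python
from dataclasses import dataclass, field

@dataclass
class Concept:
    concept_id: str
    kind: str                       # e.g. "product", "feature", "task"
    preferred_label: str            # preferred terminology
    synonyms: set = field(default_factory=set)
    # Constraint: versions where this concept is valid (empty = no restriction).
    valid_versions: set = field(default_factory=set)

@dataclass(frozen=True)
class Relation:
    source: str
    predicate: str                  # e.g. "depends_on", "replaces"
    target: str

# Hypothetical sample data.
CONCEPTS = {
    "offline_mode": Concept("offline_mode", "feature", "Offline mode",
                            {"offline access", "work offline"}, {"2.x"}),
    "local_cache": Concept("local_cache", "feature", "Local cache"),
    "legacy_sync": Concept("legacy_sync", "feature", "Legacy sync",
                           valid_versions={"1.x"}),
}

RELATIONS = [
    Relation("offline_mode", "depends_on", "local_cache"),
    Relation("offline_mode", "replaces", "legacy_sync"),
]

def related(concept_id, predicate):
    """Follow one kind of relationship outward from a concept."""
    return [r.target for r in RELATIONS
            if r.source == concept_id and r.predicate == predicate]

def preferred_term(term):
    """Map a synonym (or the label itself) to the preferred label."""
    needle = term.lower()
    for concept in CONCEPTS.values():
        if needle == concept.preferred_label.lower() or needle in concept.synonyms:
            return concept.preferred_label
    return None
```

With this in place, `related("offline_mode", "depends_on")` walks a relationship, and `preferred_term("work offline")` resolves a synonym to the controlled term.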

Instead of asking, “Which chunks are similar to this question?” the system can ask:

“Which concepts are relevant, and what content is authoritative for those concepts in this context?”
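One way to picture that second question in code: first resolve the question to concepts, then select only content tagged as authoritative for those concepts in the user's context. The sketch below is a minimal illustration under stated assumptions. The `ONTOLOGY` and `CONTENT` structures, the topic IDs, and the substring-based `resolve_concepts` matcher are all hypothetical, and a real system would use far more robust concept recognition.

```python
# Hypothetical ontology fragment: concept IDs mapped to known phrasings.
ONTOLOGY = {
    "password_reset": {
        "labels": ["reset password", "change login credentials", "forgot password"],
    },
    "report_export": {
        "labels": ["export report", "download report"],
    },
}

# Hypothetical content inventory, tagged with concept and valid versions.
CONTENT = [
    {"id": "t1", "concept": "password_reset", "versions": {"1.x"},
     "text": "Open Account > Password to reset it."},
    {"id": "t2", "concept": "password_reset", "versions": {"2.x"},
     "text": "Open Settings > Security to reset your password."},
    {"id": "t3", "concept": "report_export", "versions": {"1.x", "2.x"},
     "text": "Export reports as PDF from the Reports menu."},
]

def resolve_concepts(question):
    """Map the question to concept IDs via known labels (naive substring match)."""
    q = question.lower()
    return [cid for cid, concept in ONTOLOGY.items()
            if any(label in q for label in concept["labels"])]

def retrieve(question, version):
    """Return content for the resolved concepts that is valid for this version.

    Each hit carries the concept that justified it, so the reason for
    retrieval can be reported alongside the answer.
    """
    concept_ids = resolve_concepts(question)
    return [(item["id"], item["concept"]) for item in CONTENT
            if item["concept"] in concept_ids and version in item["versions"]]

hits = retrieve("I need to change login credentials", version="2.x")
```

In this sketch the question never mentions "password", yet the synonym list routes it to the right concept, and the version constraint excludes the 1.x topic.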

Ontology-Grounded RAG In Plain Language

Here is the simplest way to think about it:

Standard RAG retrieves text that looks relevant

Ontology-grounded RAG retrieves content that means the right thing

The ontology acts as a semantic spine that keeps AI answers aligned with reality.

Why Technical Writers Should Care

Ontology-grounded RAG rewards practices technical writers already value:

1. Clear concept definitions

If