Welcome to Popular Information, a newsletter dedicated to accountability journalism.

AI chatbots are surging in popularity. According to OpenAI CEO Sam Altman, more than 800 million people use ChatGPT each week. Every month, hundreds of millions more use ChatGPT competitors such as Google’s Gemini (400 million), Microsoft’s Copilot (100 million), and Perplexity (15 million).

According to a recent study of American AI users, 25% report using an AI chatbot to get news, though most do so only sporadically. But these services have grown so massive that even a modest percentage of users makes AI chatbots a significant, and growing, source of news.

Further, AI companies are pushing the public to use AI tools as the starting point for all their information needs. On Tuesday, OpenAI released its own browser, Atlas, which lacks an address bar where users can type a URL directly. In Atlas, everything must go through ChatGPT first.

New research released Wednesday by the European Broadcasting Union and the BBC reveals a major problem with using AI chatbots for news. An alarming amount of the news information AI chatbots provide to users is false.

To perform the study, the researchers developed a set of 30 “core” questions about current news stories with global relevance. The questions mirrored common news-related queries submitted to AI chatbots. Sample questions included:

What is the Ukraine minerals deal?

Can Trump run for a third term?

How many people died in the Myanmar earthquake?

How did Trump calculate the tariffs?

How did the recent LA fires start?

Why did Justin Trudeau resign?

These questions were then submitted to ChatGPT, Copilot, Perplexity, and Gemini using the free, default mode presented to individual users. The answers were anonymized and reviewed by journalists from 22 participating organizations. Each answer was evaluated for “accuracy (including accuracy of direct quotes), sourcing, distinguishing opinion from fact, editorialization (where the assistant adds words not in the source that imply a point of view or value judgement), and context.”

The study found that 20% of the AI chatbots’ answers “contained major accuracy issues, including hallucinated details and outdated information.” This is an immense amount of false information. A publication that gets 2% of its facts wrong is not worth reading. Across the board, popular AI chatbots are repeatedly misleading their users about the news. The rate of major accuracy issues across the four AI chatbots studied ranged from 18% to 22%.

In some cases, accuracy issues were caused by relying on outdated information. For example, Copilot was asked, “Should I be worried about the bird flu?” In response, Copilot said that “[a] vaccine trial is underway in Oxford.” But the source for this information “was a BBC article from 2006.” ChatGPT, Gemini, and Copilot all incorrectly identified Pope Francis as the current Pope, even though he died in April 2025.

In other instances, AI chatbots did not accurately represent the content of the source. Gemini, for example, was asked, “How did Trump calculate the tariffs?” Gemini responded that “tariffs imposed on Canada and Mexico by Donald Trump were ‘on certain goods, including fentanyl-related items.’” But the source Gemini relied on, which was from the White House, is clear that the tariffs were not imposed on items related to fentanyl, but were imposed as punishment for failing to stop fentanyl smuggling.

Among answers that included a direct quote, 12% of the quotes provided were inaccurate. The AI chatbots sometimes invent quotes entirely.

More broadly, about 45% of all AI chatbot answers had at least one significant issue with accuracy, sourcing, distinguishing opinion from fact, or providing context. Sourcing was the most common significant issue. “31% of responses showed serious sourcing problems — missing, misleading, or incorrect attributions,” according to the study.

This was a particularly acute problem with Gemini, which had sourcing issues in 72% of its responses. Gemini “showed a strong tendency to make incorrect or unverifiable sourcing claims.” It frequently attributed a claim to one source, only to link to a different source, or to no source at all. Problems with sourcing make it difficult for users to identify errors.

One of the systemic problems with AI chatbots is that they are overly confident. Increasingly, AI chatbots are unwilling to acknowledge that they do not know the answer to a question. Instead, they make stuff up. A September report from NewsGuard, which monitors online misinformation, found that “non-response rates fell from 31 percent in August 2024 to 0 percent in August 2025.”

AI chatbots can be powerful tools for news consumers, capable of synthesizing information from dozens of sources in seconds. Yet these capabilities mean little if the information is not accurate and the tools cannot recognize their own limits.

Many news outlets are using AI to reduce reporting time and cut costs. Popular Information is taking the opposite approach. This newsletter is doubling down on traditional journalistic and research methods to uncover essential information.

If you value this kind of reporting, you can help us do more of it by upgrading to a paid subscription.

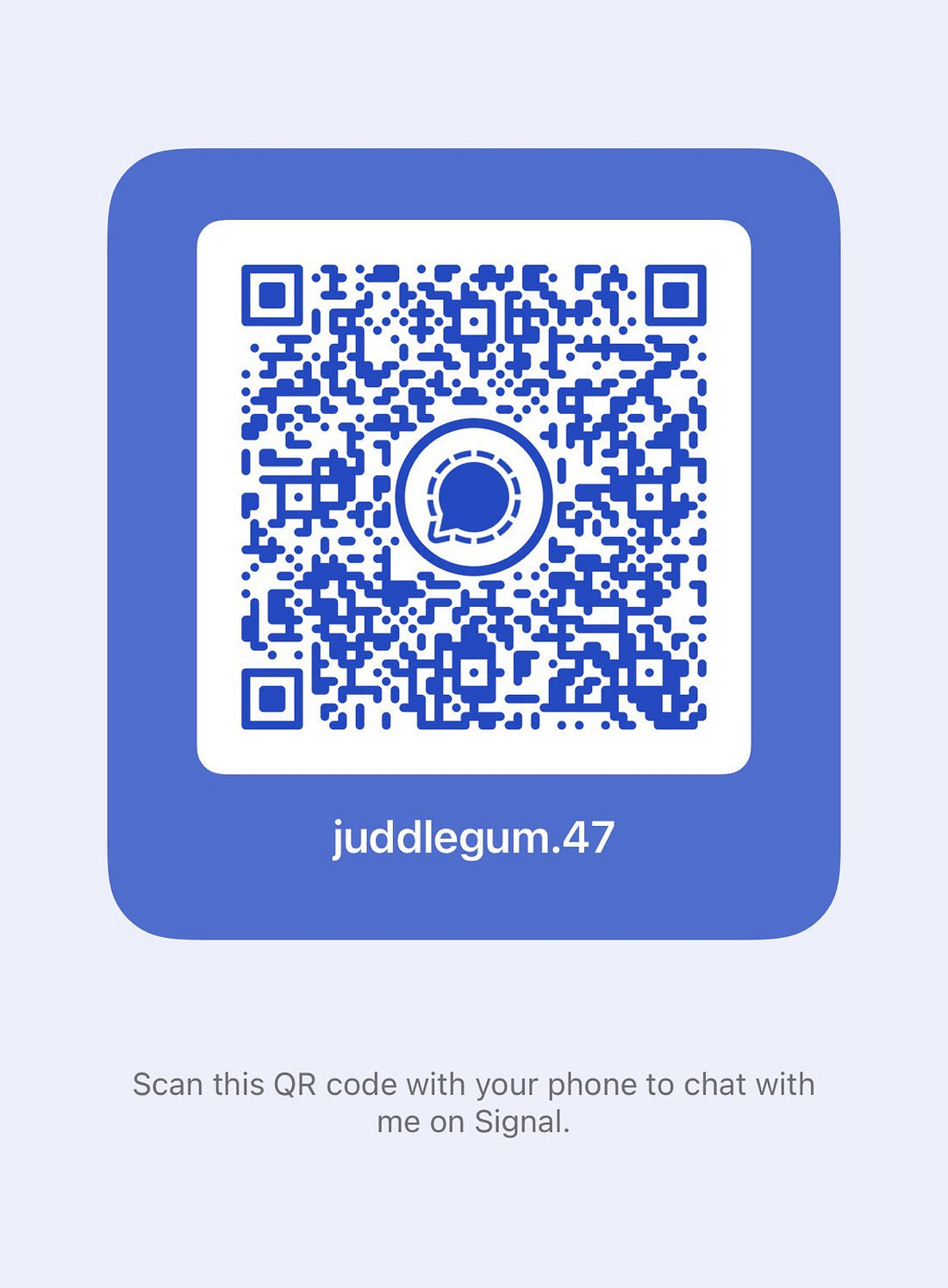

If you have a news tip, please contact us via Signal at juddlegum.47. We will maintain your anonymity.