|

Crane Worldwide Logistics Joins Streamfest 2025 — Hear Their Story (Sponsored)

Join us November 5-6 for Redpanda Streamfest, the two-day online event exploring the future of streaming data and real-time AI. Jared Noynaert, VP of Engineering at Crane Worldwide Logistics, will share insights on the fundamentals of modern data infrastructure — from isolation and auto-scaling to branching and serverless models that redefine how organizations scale. It’s a forward-looking look at the principles shaping next-generation architectures and where Redpanda and the broader Kafka ecosystem fit in. Streamfest features keynotes, demos, and case studies from engineers building the systems of tomorrow.

Disclaimer: The details in this post have been derived from the details shared online by the Nubank Engineering Team. All credit for the technical details goes to the Nubank Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

When a company grows rapidly, its technical systems are often pushed to their limits.

This is exactly what happened with Nubank, one of the largest digital banks in the world. As the company scaled its operations, the logging platform it relied on began to show serious cracks. Logging may sound like a small part of a tech stack, but it plays a critical role. Every time a service runs, it generates logs that help engineers understand what is happening behind the scenes. These logs are essential for monitoring systems, fixing problems quickly, and keeping the platform stable.

Nubank was using an external vendor to handle its log ingestion and storage. Over time, this setup became both costly and difficult to manage. The engineering team had very limited visibility into how logs were collected and stored, which made troubleshooting production issues harder. If something went wrong, they could not always trust that the logs they needed would be available.

Costs were also rising fast, with no clear way to predict future spending. The only way to handle more load was to pay more money. On top of that, important tools like alerts and dashboards were tied directly to the vendor’s platform, making it difficult to change providers. Finally, whenever log ingestion spiked, it would slow down the querying process, affecting incident response.

These challenges pushed Nubank to build its own in-house logging platform to take back control, reduce costs, and ensure a stable foundation for its fast-growing systems.

In this article, we will look at how Nubank built its in-house logging platform and the challenges the team faced.

The Initial Logging Architecture

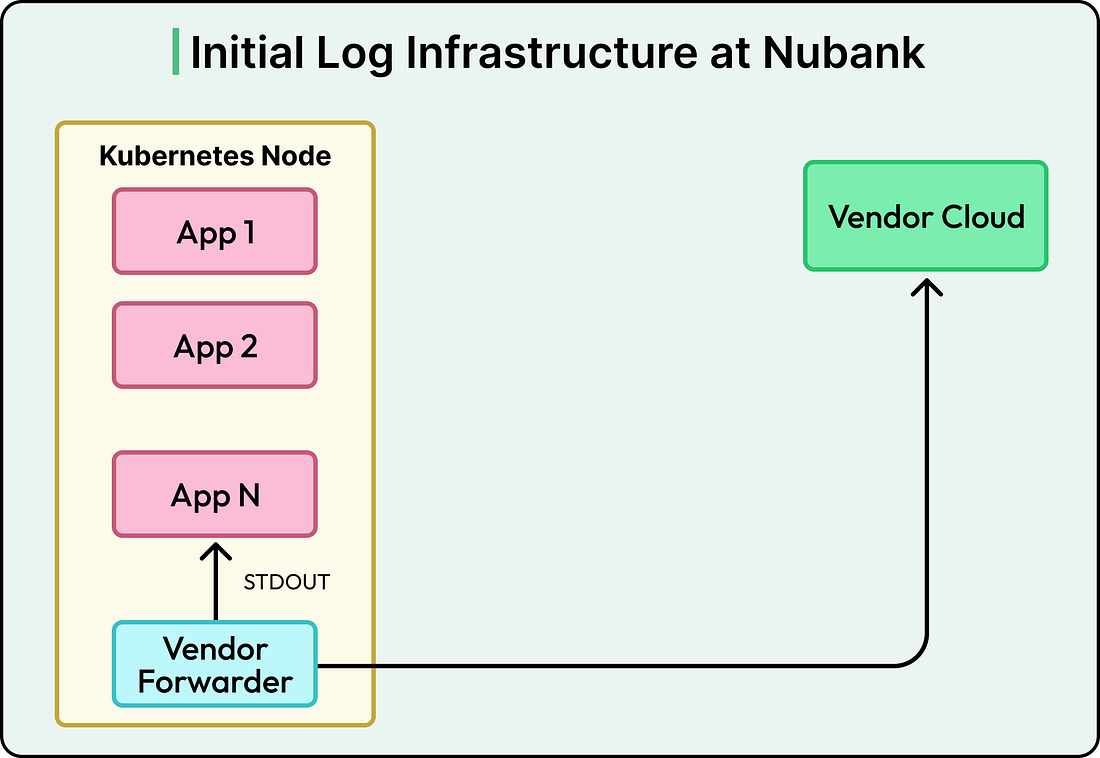

Before building its own logging platform, Nubank relied entirely on a third-party vendor.

The setup was straightforward. Every application inside the company sends its logs directly to the vendor’s forwarder or API. This worked well in the early days because the number of services was smaller, the log volume was manageable, and the system was simple to maintain.

However, as the company grew, this basic setup began to fall apart due to the following reasons:

The first problem was the lack of control over incoming data. Logs were sent as they were generated, with no way to filter out unnecessary information or route different kinds of logs to different places. This meant the system was processing a lot of data that didn’t always add value, driving up costs.

Another major issue was limited visibility into the logging pipeline. If something failed inside the vendor’s platform, the engineering team had little insight into where or why it happened. These “blind spots” made it difficult to detect problems early or trust that logs were being handled correctly.

The costs were also growing quickly. As more applications were added, the volume of logs increased, and so did the bills. The system offered no easy way to optimize or reduce those costs.

Finally, the architecture itself was rigid. Because everything was tightly tied to the vendor, Nubank could not easily change how logs were ingested, stored, or queried.

Two-Phase Platform Strategy

To solve its growing challenges, the Nubank engineering team decided not to build everything at once. Instead, they split the project into two clear phases.

The first phase was called the Observability Stream. This part focused on ingesting and processing logs. In other words, this involved collecting data from thousands of applications and preparing it for storage. The team wanted a platform that could handle large amounts of incoming data reliably and efficiently. It also needed to be flexible enough to apply filters, process different data types, and generate useful metrics.

The second phase was the Query and Storage Platform. Once logs were collected and processed, they needed to be stored in a way that made them easy to search. Engineers use logs daily to investigate incidents, debug issues, and understand system behavior. This platform had to support fast queries, store data at low cost, and remain scalable as Nubank’s infrastructure kept expanding.

This two-phased approach made the migration smoother and allowed them to test and improve each part before moving on. The goal was to create a complete, in-house log management system that could grow with Nubank’s massive scale.

Both phases were designed with three key goals in mind:

Reliability: The platform had to keep working smoothly even during spikes in usage or unexpected failures.

Scalability: It needed to handle both short-term traffic bursts and long-term growth.

Cost efficiency: The solution had to be significantly cheaper than commercial vendors while providing full control and visibility.

With these guiding principles, Nubank began building the new logging platform from the ground up.

Ingestion Pipeline

The Nubank engineering team began by focusing on the first phase of their new platform: the ingestion pipeline, also known as the Observability Stream.

This part of the system is responsible for collecting logs from many different applications, processing them in a structured way, and preparing them for storage. In their old setup, ingestion and querying were tightly connected, which meant problems in one area could affect the other. By separating the two, Nubank gained the ability to scale and manage each part independently.