

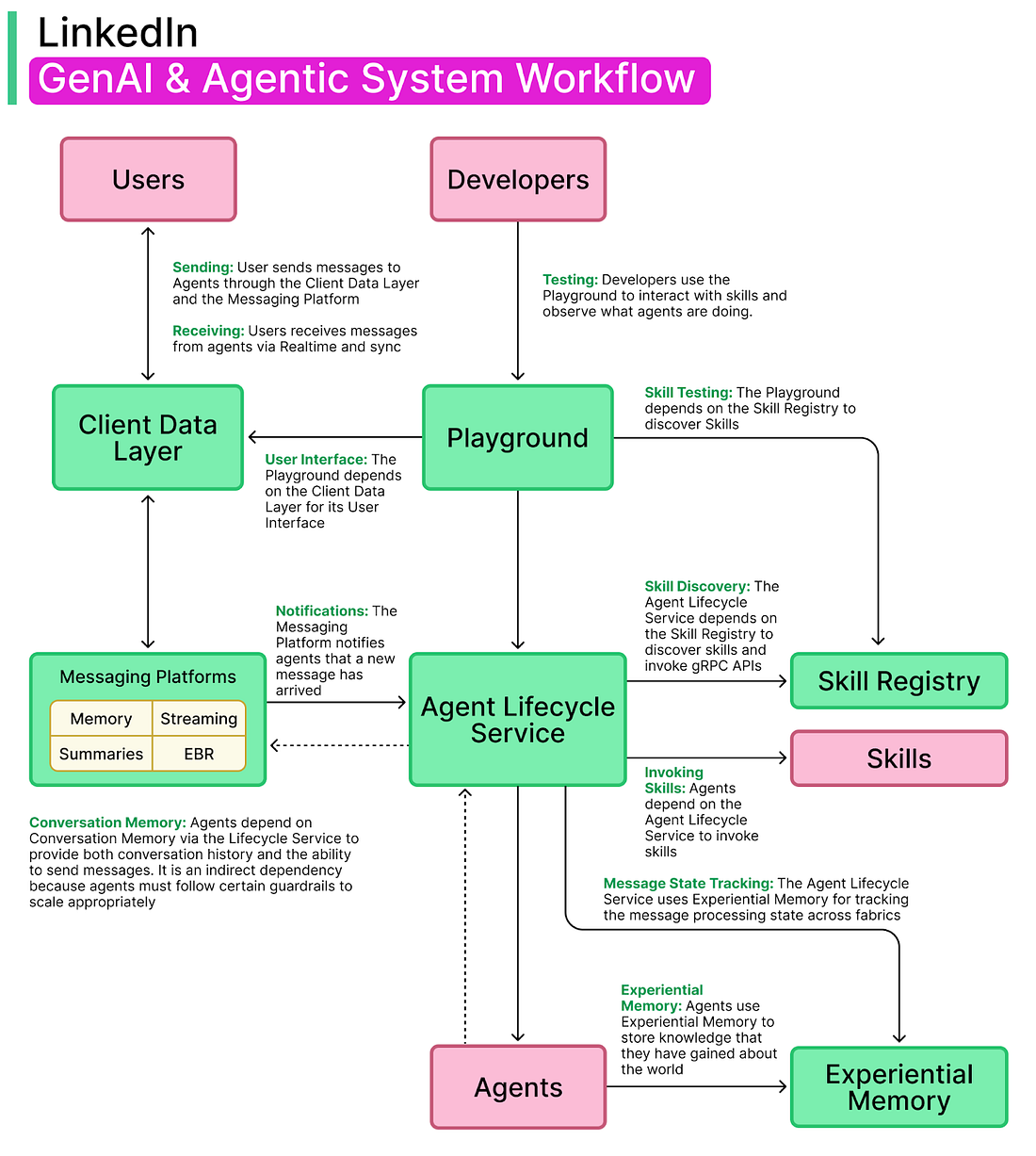

Note: This article is written in collaboration with the engineering team of LinkedIn. Special thanks to Karthik Ramgopal, a Distinguished Engineer from the LinkedIn engineering team, for helping us understand the GenAI architecture at LinkedIn. All credit for the technical details and diagrams shared in this article goes to the LinkedIn Engineering Team.

Over the past two years, LinkedIn has undergone a rapid transformation in how it builds and ships AI-powered products.

What began with a handful of GenAI features, such as collaborative articles, AI-assisted Recruiter capabilities, and AI-powered insights for members and customers, has evolved into a comprehensive platform strategy that now involves multiple products. One of the most prominent examples of this shift is Hiring Assistant, LinkedIn’s first large-scale AI agent for recruiters, designed to help streamline candidate sourcing and engagement.

Behind these product launches was a clear motivation to scale GenAI use cases efficiently and responsibly across LinkedIn’s ecosystem.

Early GenAI experiments were valuable but siloed because each product team built its own scaffolding for prompts, model calls, and memory management. This fragmented approach made it difficult to maintain consistency, slowed iteration, and risked duplicating efforts. As adoption grew, the engineering organization recognized the need for a unified GenAI application stack that could serve as the foundation for all future AI-driven initiatives.

The goals of this platform were as follows:

Teams needed to move fast to capitalize on GenAI momentum.

Engineers across LinkedIn should be able to build GenAI features without reinventing basic infrastructure each time.

Trust, privacy, and safety guardrails had to be baked into the stack.
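To make the idea of a shared stack concrete, here is a minimal Python sketch of what "not reinventing basic infrastructure" might look like. A single reusable class owns prompt templating, the model call, conversation memory, and guardrail checks, so a product team only supplies a template and a model. Everything here (`GenAIApp`, `echo_model`, `no_ssn`) is hypothetical and purely illustrative. The article does not describe LinkedIn's actual interfaces, and a production guardrail would be far richer than a substring check.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Callable, List

# Hypothetical signatures: a model maps prompt text to completion text,
# and a guardrail returns True when a piece of text is allowed through.
ModelFn = Callable[[str], str]
Guardrail = Callable[[str], bool]


@dataclass
class GenAIApp:
    """Illustrative shared scaffold: templating, model call, memory, guardrails."""

    template: Template
    model: ModelFn
    guardrails: List[Guardrail] = field(default_factory=list)
    memory: List[str] = field(default_factory=list)

    def _check(self, text: str) -> None:
        # Every prompt and reply passes through the same checks,
        # so individual product teams cannot forget to apply them.
        for rule in self.guardrails:
            if not rule(text):
                raise ValueError("guardrail rejected text")

    def run(self, question: str) -> str:
        history = "\n".join(self.memory)
        prompt = self.template.substitute(history=history, question=question)
        self._check(prompt)  # input-side check
        reply = self.model(prompt)
        self._check(reply)  # output-side check
        # Simple conversation memory kept across calls to this app.
        self.memory.append(f"user: {question}")
        self.memory.append(f"assistant: {reply}")
        return reply


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call: upper-cases the last line of the prompt."""
    return prompt.splitlines()[-1].upper()


def no_ssn(text: str) -> bool:
    """Toy guardrail: block any text that mentions an SSN."""
    return "ssn" not in text.lower()


app = GenAIApp(
    template=Template("$history\nQuestion: $question"),
    model=echo_model,
    guardrails=[no_ssn],
)
print(app.run("summarize this profile"))  # QUESTION: SUMMARIZE THIS PROFILE
```

The design choice being illustrated is centralization. Because guardrails live inside `run` rather than in each product's code, swapping the model or tightening a safety rule happens in one place for every feature built on the scaffold.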

In this article, we look at how LinkedIn’s engineering teams met these goals while building the GenAI setup. We’ll explore how they transitioned from early feature experiments to a robust GenAI platform, and eventually to multi-agent systems capable of reasoning, planning, and collaborating at scale. Along the way, we’ll highlight architectural decisions, platform abstractions, developer tooling, and real insights from the team behind the stack.

Foundations of the GenAI Application Stack

Before LinkedIn could build sophisticated AI agents, it had to establish the right engineering foundations.

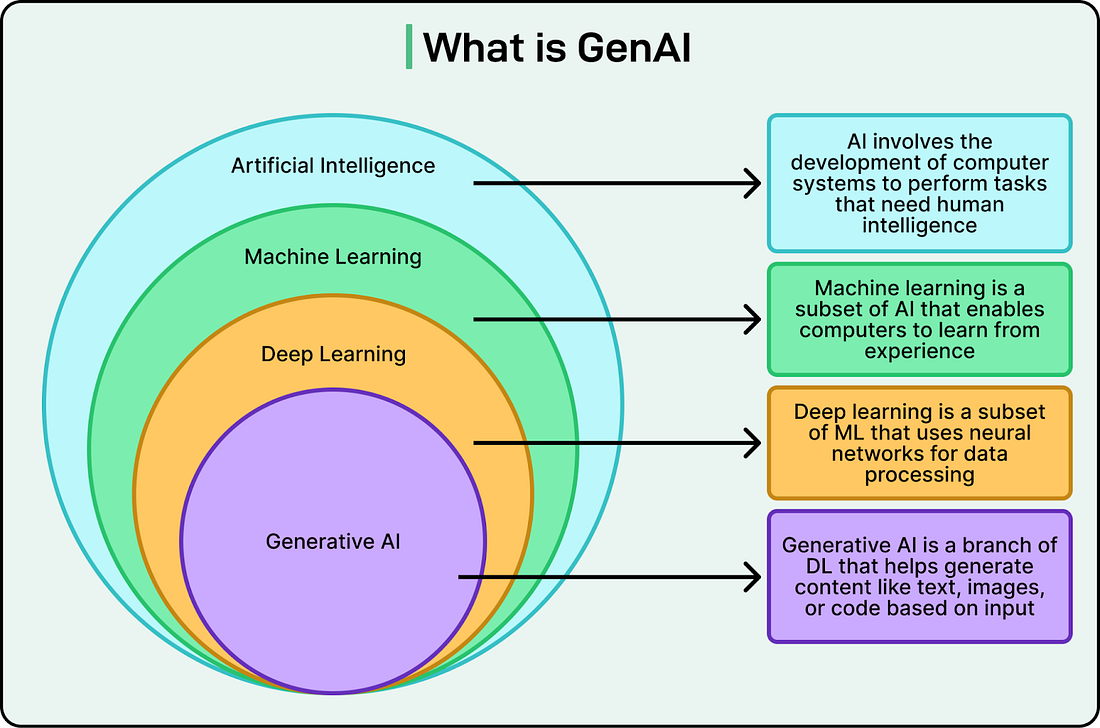

During 2023 and 2024, LinkedIn focused on building a unified GenAI application stack that could support fast experimentation while remaining stable and scalable. GenAI is a branch of deep learning concerned with generating content, such as text, images, or code, from an input. The diagram above shows how it relates to broader fields such as AI and machine learning.

1 - The Language and Framework Shift

When LinkedIn started shipping GenAI features, the engineering stack was fragmented.

Online production systems were almost entirely written in Java, while offline GenAI experimentation, including prompt engineering and model evaluation, was taking place in Python. This created constant friction. Engineers had to translate ideas from Python into Java to deploy them, which slowed experimentation and introduced inconsistencies.

To solve this, LinkedIn made a decisive shift to use Python as a first-class language for both offline and online GenAI development. There were three main reasons behind this move:

Ecosystem: Most open-source GenAI tools, frameworks, and model libraries evolve faster in Python.

Developer familiarity: AI engineers were already comfortable building and iterating in Python.

Avoiding duplication: A single language meant fewer translation steps and reduced maintenance overhead.

After internal discussions and some healthy debate, the team created prototypes that demonstrated Python’s velocity advantages. These early wins helped build confidence across the organization, even among teams that had spent years in the Java ecosystem.

However, moving to Python did not mean rewriting all infrastructure at once. Instead, LinkedIn focused on incremental steps, such as:

gRPC over Rest.li: LinkedIn was already planning to transition its RPC layer from Rest.li to gRPC. The GenAI stack aligned with this direction and prioritized gRPC support for Python clients.

REST proxy for storage: Instead of building a native Python client for Espresso (LinkedIn's distributed document store), the team used an existing REST proxy. This avoided building a new client while still giving Python GenAI apps access to data stored in Espresso.
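The storage shortcut is easy to picture from the Python side. With a REST proxy in front of Espresso, a GenAI service needs nothing beyond a standard HTTP client. The sketch below uses only Python's standard library. The proxy URL, the `database/table/key` path layout, and the `Prompts`/`Templates` names are hypothetical, since the article does not document the proxy's actual API.

```python
import json
import urllib.request
from urllib.parse import quote

# Hypothetical proxy address: the article does not describe the real
# endpoint layout of LinkedIn's Espresso REST proxy.
PROXY_BASE = "http://storage-proxy.internal.example"


def build_get_request(database: str, table: str, key: str) -> urllib.request.Request:
    """Build (without sending) an HTTP GET for one document via the proxy."""
    # Percent-encode each path segment so keys containing "/" stay intact.
    path = "/".join(quote(part, safe="") for part in (database, table, key))
    return urllib.request.Request(
        f"{PROXY_BASE}/{path}",
        headers={"Accept": "application/json"},
        method="GET",
    )


def fetch_document(database: str, table: str, key: str, timeout: float = 2.0) -> dict:
    """Send the request and decode the JSON body (needs a reachable proxy)."""
    request = build_get_request(database, table, key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


req = build_get_request("Prompts", "Templates", "hiring/v1")
print(req.get_method(), req.full_url)
```

The trade-off is that every read typically goes through an extra network hop at the proxy. In return, the team avoids writing and maintaining a native Python client, which fits the incremental approach of reusing existing infrastructure wherever possible.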