Maps and models for Solo Chiefs navigating sole accountability in the age of AI.

Clayton Christensen’s framework lives inside Claude now. I don’t even need to understand it.

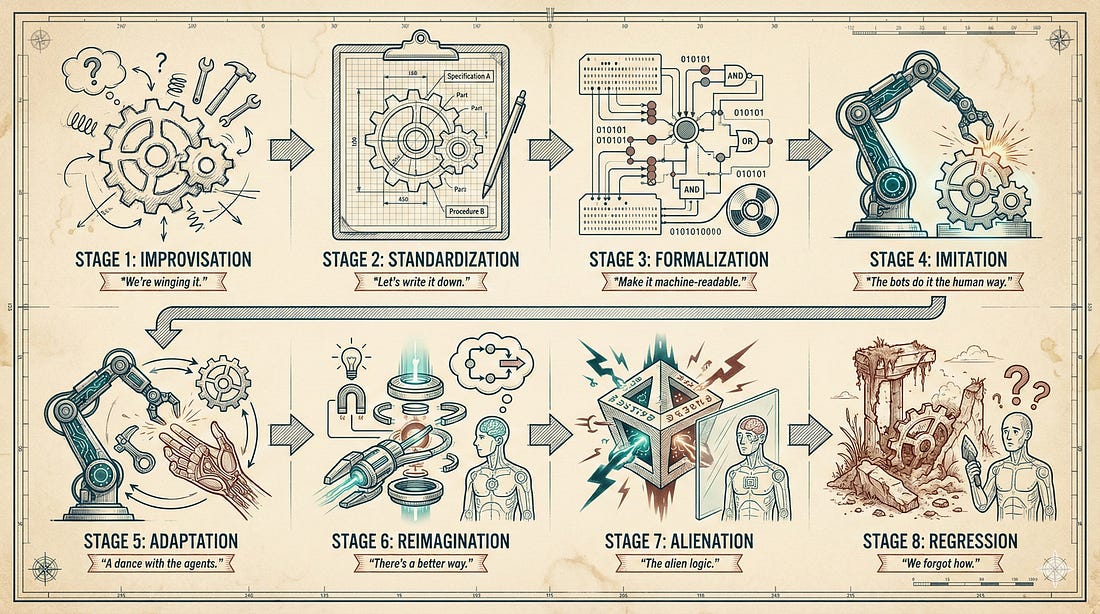

The Tragedy of the Agents maps eight stages from human improvisation to machine alienation. Understanding where you are determines whether you keep agency or lose it.

Last week I had eight conversations with readers of this Substack. Solo Chiefs, all of them, each carrying the single wringable neck in their own eclectic ways. My goal was to refine the Job-to-Be-Done for my writing: what problems they actually have, what they need, what keeps them up at night.

The meetings were genuinely useful. I’m committing to do them more often.

But here’s what hit me afterward, the thing I can’t stop thinking about: I never actually used the JTBD framework myself.

I downloaded the meeting transcripts and stored them on Google Drive. I grabbed a JTBD skill from the skills marketplace and asked Claude to analyze everything I discussed with my readers. Claude went through the rituals (functional needs, emotional needs, social needs, the whole shebang). Then it generated a report and I asked it to turn that report into a new skill optimized for my Substack: a “JTBD evaluator” that can analyze any future article draft and tell me how to better align it with my readers’ pains and gains.

Twenty minutes of work. Maybe less.

And I sat there realizing: Clayton Christensen’s framework stopped living in my head. It lives inside Claude now. I don’t even need to know how it works. Claude performed the analysis the human way, using human language, following human-designed patterns and protocols. I was just the orchestrator giving the agent a task to complete. Skills are the new patterns and practices.

And that’s the unsettling part. When the patterns and practices live in the agent instead of in me, what happens to my judgment? What happens when the agent gets it wrong and I can’t even tell?

The AlphaZero Moment Is Near

You’ve probably noticed the noise around Moltbook last week. (It’s a social network where AI agents talk to each other, in case you stuck your head in a book for a few hours too long.)

The viral popularity of Moltbook offers us a glimpse of something fascinating. AI agents have now started to share best practices with each other. They’re developing their own shorthand. Their own optimizations. Soon, they may even lock us humans out by creating their own native language.

Claude may not need the human-made JTBD framework to analyze user needs. It’ll figure out better ways. It may invent approaches that don’t require human practices or human concepts at all. Just like AlphaZero figured out a better way to play chess that had nothing to do with centuries of human strategy.

This realization sent me down a rabbit hole. Together with my team of AIs (yes, I outsource thinking about AI to the AIs, because I love reflexivity and going meta), I came up with a model for the progression of automation dependency. I call it the Tragedy of the Agents.

It’s not a maturity model, though it looks like one.

It’s more of a drama that unfolds along a roadmap.

You’re reading The Maverick Mapmaker: maps and models for Solo Chiefs navigating sole accountability in the age of AI. All posts are free, always.